Categories

Artificial intelligence

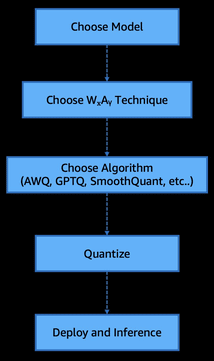

Accelerating LLM inference with post-training weight and activation using AWQ and GPTQ on Amazon SageMaker AI | Amazon Web Services

Foundation models (FMs) and large language models (LLMs) have been rapidly scaling, often doubling in parameter count within months, leading to significant improvements in…