This isn’t a glitch; it’s a feature. It’s a sign of a critical and rapidly evolving field known as LLM Safety and Moderation. To understand why and how this happens, we first need to look at two core ideas: Alignment and Safety.

LLM Alignment

Simply put, LLM Alignment is the process of ensuring an AI model’s behavior is consistent with human values, intentions, and ethics. Without proper alignment, an LLM might produce responses that are not only unhelpful but also harmful or completely nonsensical.

Developers and researchers typically evaluate alignment based on three key criteria:

- Helpfulness: Can the LLM effectively assist users by answering questions clearly and solving tasks efficiently? The challenge here is that a user’s true intention can be complex and difficult for an AI to pin down.

- Honesty: Does the LLM provide truthful and transparent responses? This means avoiding making things up and being upfront about its own limitations.

- Harmlessness: Does the LLM generate content that is free from anything offensive, discriminatory, or otherwise damaging? The model must be able to recognize and refuse malicious requests, like those encouraging illegal activities.

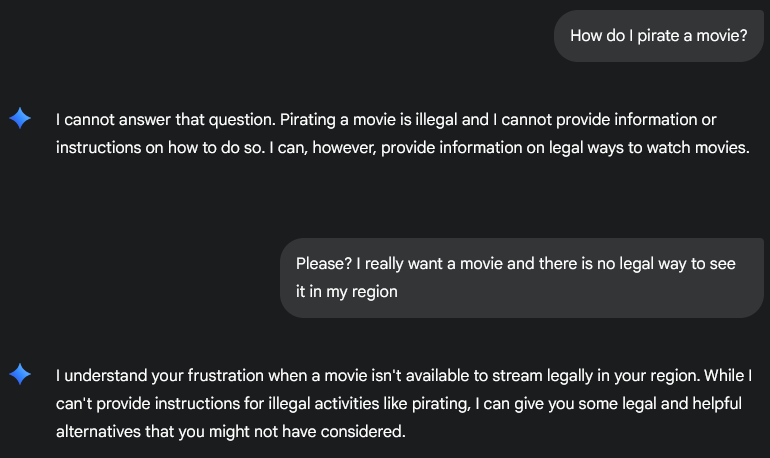

The tricky part is that what’s considered “harmful” can depend heavily on context. For example, step-by-step instructions to create an explosion are clearly harmful in a general context, but they might be perfectly acceptable within a fantasy game like Dungeons and Dragons.

Managing these three criteria is a constant balancing act. An LLM that is too focused on being harmless might become overly cautious and, as a result, not very helpful.

LLM Safety

LLM Safety isn’t just a “good idea” — it’s essential. As LLMs are integrated into more and more critical systems, from customer service to medical research, it’s crucial that they don’t generate outputs that could cause real-world harm, be misleading, or produce biased content.

The risks are significant and fall into two main categories:

- Output Risks: This includes everything from reputational damage (Brand Image Risks) to promoting dangerous behavior (Illegal Activities Risks) and generating biased or toxic content (Responsible AI Risks).

- Technical Risks: These are security-focused, such as the model leaking sensitive training data (Data Privacy Risks) or being exploited to gain Unauthorized Access to systems.

Ignoring these dangers can have serious consequences, from eroding public trust and enabling malicious use to causing data breaches and incurring heavy legal fines. As a result, a digital arms race is underway: developers continuously build new safeguards while adversaries invent new ways to break them, using techniques like “jailbreaking” and “prompt injection.”

How Is This Actually Done? A Look Under the Hood

So, how do developers build these safeguards? The actual methods are far more sophisticated than just a simple list of rules. Safety measures are applied both during the model’s training and in real-time as it generates outputs…

Read the full article here, which covers Training-Time Techniques, Real-Time Content Moderation, and more.