
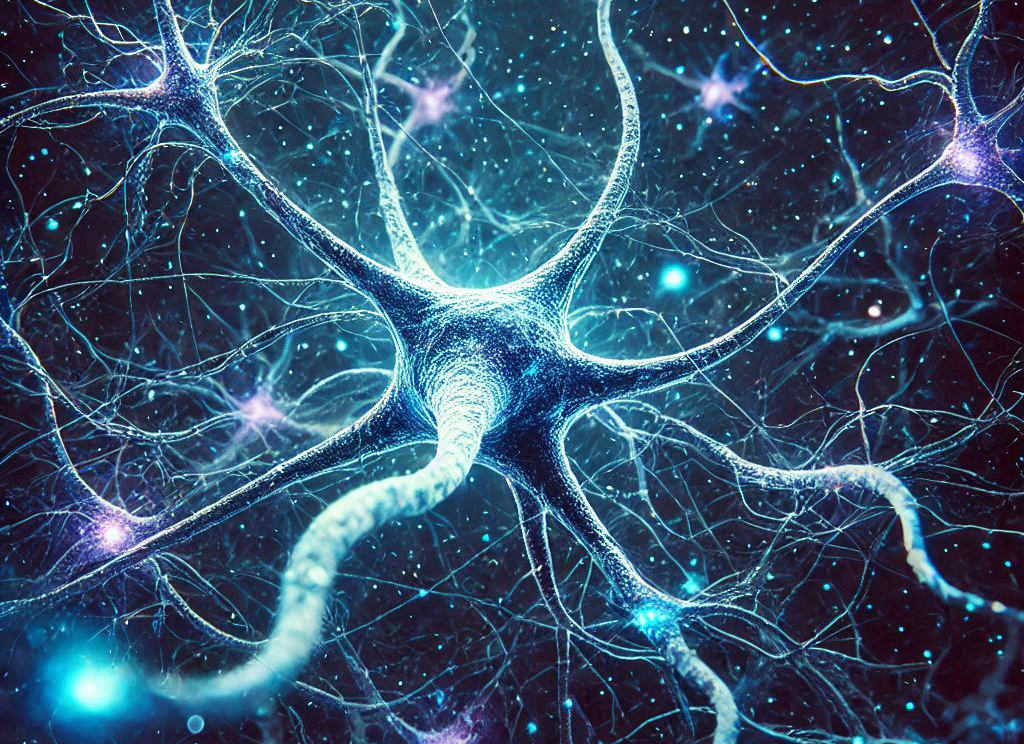

The math behind neural networks visually explained


Artificial neural networks are among the most powerful and, at the same time, the most complicated machine learning models. They are particularly useful for difficult tasks where traditional machine learning algorithms fall short. Their main advantage is the ability to learn intricate patterns and relationships in data, even when the data is high-dimensional or unstructured.

Many articles discuss the math behind neural networks, covering topics such as activation functions, forward propagation and backpropagation, gradient descent, and optimization methods in detail. In this article, we take a different approach and build a visual understanding of a neural network, layer by layer. We first focus on single-layer neural networks for both classification and regression problems, and on their similarities to other machine learning models. Then we discuss the importance of hidden layers and non-linear activation functions. All the visualizations were created using Python.
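To make the idea of a single-layer network concrete before the visuals, here is a minimal sketch of its forward pass in plain Python. It is not the author's code: the function names, the weights, and the input are hypothetical and chosen only for illustration. It assumes a sigmoid output activation for classification and an identity (linear) output activation for regression. With those choices, the network computes the same functional form as logistic regression and linear regression respectively: an activation applied to a weighted sum of the inputs plus a bias.

```python
import math

def sigmoid(z):
    """Logistic sigmoid: maps any real z into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def identity(z):
    """Identity activation: returns the weighted sum unchanged."""
    return z

def single_layer_forward(x, weights, bias, activation):
    """Forward pass of a hypothetical single-layer network (no hidden layers):
    compute z = w . x + b, then apply one output activation."""
    z = sum(w_i * x_i for w_i, x_i in zip(weights, x)) + bias
    return activation(z)

# Hypothetical parameters and input, for illustration only.
weights = [0.5, -1.0]
bias = 0.25
x = [2.0, 1.0]

# Classification: sigmoid output has the same form as logistic regression.
p = single_layer_forward(x, weights, bias, sigmoid)

# Regression: identity output has the same form as linear regression.
y_hat = single_layer_forward(x, weights, bias, identity)

print(p, y_hat)
```

Here the weighted sum is 0.5 * 2.0 - 1.0 * 1.0 + 0.25 = 0.25, so the regression output is 0.25 and the classification output is the sigmoid of 0.25, a value between 0 and 1 that can be read as a class probability.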

All the images in this article were created by the author.

Neural networks for classification
