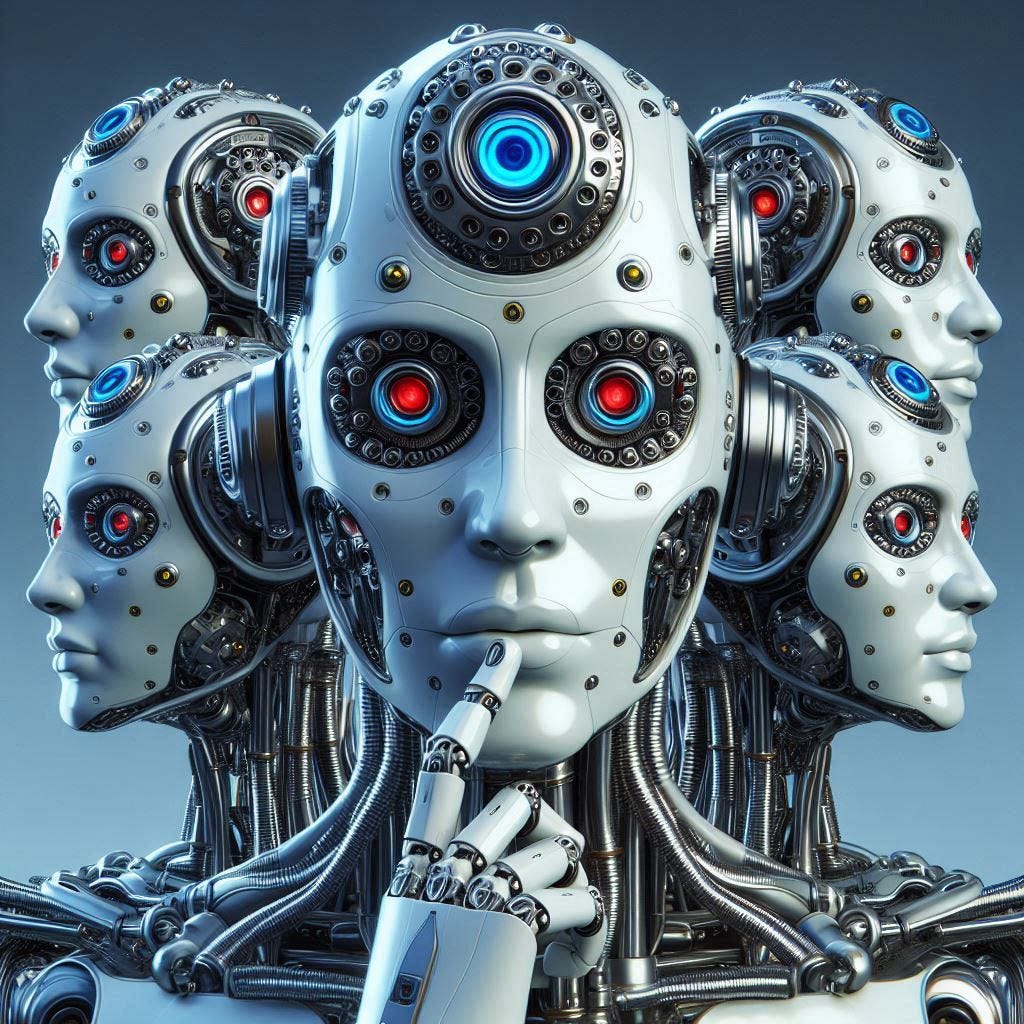

A comprehensive and detailed formalisation of multi-head attention

Multi-head attention plays a crucial role in transformers, which have revolutionized Natural Language Processing (NLP). Understanding this mechanism is a necessary step toward a clearer picture of current state-of-the-art language models.
