Instead of a petabyte-scale data dump, they trained their first model, phi-1, on a tiny, curated dataset. It was composed of two things: heavily filtered, high-quality code from the web, and, crucially, synthetic “textbooks” generated by a larger AI.

This is the “Aha!” moment. The Master Chef isn’t just teaching the Apprentice in person anymore. He’s using his vast knowledge to write the perfect cookbook. This “textbook-quality” data is clean, clear, and pedagogically structured. It’s the difference between learning a language by reading every unhinged comment on Reddit and taking a perfectly designed Duolingo course.

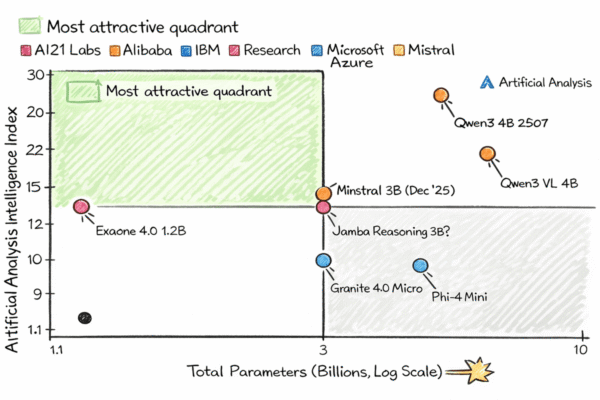
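To make the recipe concrete, here is a minimal sketch of the two-ingredient mix: filter web code for quality, then add the synthetic textbooks. Everything in it is an assumption made for illustration. The `quality_score` heuristic, the `build_corpus` helper, and the `0.6` threshold are hypothetical stand-ins, not the filtering method from Gunasekar et al. (2023).

```python
def quality_score(snippet: str) -> float:
    """Toy heuristic (an assumption, not the paper's filter).

    Returns the fraction of three "teachable" signals found in a snippet:
    a docstring, at least one comment line, and a reasonable length.
    """
    lines = [ln for ln in snippet.splitlines() if ln.strip()]
    if not lines:
        return 0.0
    has_docstring = '"""' in snippet or "'''" in snippet
    has_comment = any(ln.strip().startswith("#") for ln in lines)
    reasonable_length = 3 <= len(lines) <= 200
    return (has_docstring + has_comment + reasonable_length) / 3


def build_corpus(web_snippets, synthetic_textbooks, threshold=0.6):
    """Keep only web snippets that clear the threshold, then append the textbooks."""
    kept = [s for s in web_snippets if quality_score(s) >= threshold]
    return kept + list(synthetic_textbooks)


if __name__ == "__main__":
    clean = (
        "def add(a, b):\n"
        '    """Return the sum of a and b."""\n'
        "    # Plain addition, nothing clever.\n"
        "    return a + b\n"
    )
    junk = "x=1"
    textbook = "Chapter 1: A function maps inputs to outputs. Example: ..."
    corpus = build_corpus([clean, junk], [textbook])
    print(len(corpus), "documents kept")
```

A real pipeline would swap the heuristic for something far stronger, but the shape stays the same: score, threshold, then blend in the synthetic material.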

The results were insane. The 1.3 billion-parameter phi-1, trained on only about 7 billion tokens, outperformed models ten times its size on coding benchmarks (Gunasekar et al., 2023). Its successors, Phi-2 and Phi-3, extended this principle to general reasoning, suggesting that a smaller model fed a five-star diet can outperform a giant model fed on junk food (Javaheripi et al., 2023; Microsoft Azure AI team, 2024).

This virtuous cycle is supercharged by architectural improvements in open models like Meta’s Llama 3. With a larger tokenizer vocabulary of 128K tokens (which encodes the same text in fewer tokens) and Grouped Query Attention (a more efficient mental “kitchen layout” in which several query heads share one set of keys and values), these models provide a sturdy foundation on which advanced teaching and data-curation techniques can shine (Meta AI team, 2024; Ainslie et al., 2023).
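For readers who want to see the “kitchen layout” in code, here is a minimal pure-Python sketch of the idea behind Grouped Query Attention: several query heads share a single key/value head, so the model stores fewer keys and values. The function names, the nested-list layout, and the tiny sizes are assumptions chosen for readability. This is not Llama 3’s implementation, which runs on batched tensors with learned projections.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]


def grouped_query_attention(queries, keys, values):
    """Causal GQA sketch.

    queries: [n_q_heads][seq_len][head_dim]
    keys, values: [n_kv_heads][seq_len][head_dim]
    Each block of (n_q_heads // n_kv_heads) query heads shares one K/V head.
    """
    n_q_heads, n_kv_heads = len(queries), len(keys)
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("n_q_heads must be a multiple of n_kv_heads")
    group_size = n_q_heads // n_kv_heads
    outputs = []
    for head, q_head in enumerate(queries):
        kv = head // group_size  # index of the shared key/value head
        outputs.append([
            # Causal mask: position t only sees positions 0..t.
            attend(q, keys[kv][: t + 1], values[kv][: t + 1])
            for t, q in enumerate(q_head)
        ])
    return outputs


if __name__ == "__main__":
    # 4 query heads sharing 2 key/value heads, 2 positions, head_dim 2.
    queries = [[[1.0, 0.0], [0.0, 1.0]] for _ in range(4)]
    keys = [[[1.0, 0.0], [0.0, 1.0]], [[0.5, 0.5], [1.0, 1.0]]]
    values = [[[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]]]
    out = grouped_query_attention(queries, keys, values)
    print(len(out), "heads,", len(out[0]), "positions each")
```

With one key/value head per query head this reduces to ordinary multi-head attention, and with a single shared head it becomes multi-query attention. The grouped version sits in between, storing `n_q_heads / n_kv_heads` times fewer keys and values than the multi-head case.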