Image by Editor

#Introduction

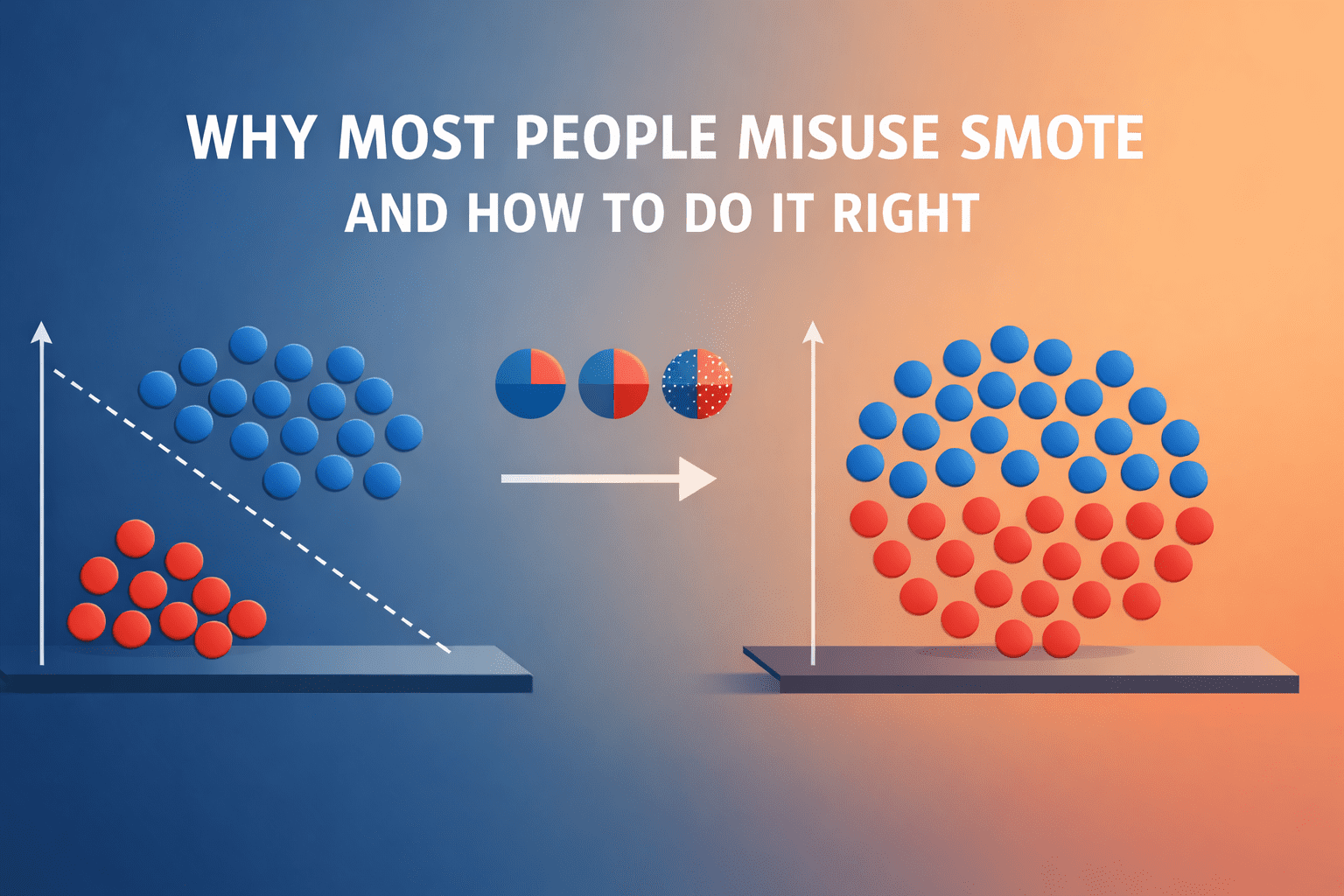

Getting labeled data (that is, data with ground-truth target labels) is a fundamental step in building most supervised machine learning models, such as random forests, logistic regression, or neural network-based classifiers. Obtaining enough labeled data is a major difficulty in many real-world applications, but even once that box is checked, one more important challenge may remain: class imbalance.

Class imbalance occurs when a labeled dataset contains classes with very disparate numbers of observations, usually with one or more classes vastly underrepresented. Training a classifier on such data tends to produce biased decision boundaries, poor recall on the minority class, and misleadingly high accuracy. In practice, the model performs well “on paper” but, once deployed, fails in the critical cases we care about most. Fraud detection in bank transactions is a clear example: transaction datasets are extremely imbalanced, with roughly 99% of transactions being legitimate.
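To make the “misleadingly high accuracy” point concrete, here is a minimal sketch with made-up labels (the 990/10 split is an assumption chosen to mirror the 99% figure, not real transaction data). A model that always predicts the majority class scores very well on accuracy while detecting no fraud at all:

```python
import numpy as np

# Hypothetical toy labels: 990 legitimate (0) and 10 fraudulent (1) transactions.
y_true = np.array([0] * 990 + [1] * 10)

# A "model" that ignores its input and always predicts the majority class.
y_pred = np.zeros_like(y_true)

# Fraction of all predictions that are correct.
accuracy = (y_pred == y_true).mean()

# Recall on the minority class: detected frauds / all actual frauds.
true_positives = np.sum((y_pred == 1) & (y_true == 1))
false_negatives = np.sum((y_pred == 0) & (y_true == 1))
minority_recall = true_positives / (true_positives + false_negatives)

print(f"accuracy = {accuracy:.2f}")                # accuracy = 0.99
print(f"minority recall = {minority_recall:.2f}")  # minority recall = 0.00
```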

Synthetic Minority Over-sampling Technique (SMOTE) is a data-level resampling technique that tackles this issue by generating new synthetic samples of the minority class (e.g. fraudulent transactions), interpolating between existing real instances.

This article briefly introduces SMOTE, then focuses on how to apply it correctly, why it is often misused, and how to avoid those mistakes.

#What SMOTE is and How it Works

SMOTE is a data augmentation technique for addressing class imbalance problems in machine learning, specifically in supervised models like classifiers. In classification, when at least one class is significantly under-represented compared to others, the model can easily become biased toward the majority class, leading to poor performance, especially when it comes to predicting the rare class.

To cope with this challenge, SMOTE creates synthetic examples for the minority class. Rather than replicating existing instances as they are, it interpolates between a minority sample and its nearest minority-class neighbors in feature space. In essence, this “fills in” gaps in the regions where existing minority instances lie, which helps balance the dataset.

For each minority example, SMOTE identifies its *k* nearest minority-class neighbors and generates a new synthetic point at a random position along the line segment between the example and a randomly chosen neighbor. Repeating these simple steps yields a new set of minority class examples, so the model is trained on a richer representation of the minority class(es) in the dataset, which often makes it more effective and less biased toward the majority class.
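The interpolation step can be sketched in a few lines of NumPy. This is an illustrative simplification, not the imbalanced-learn implementation: the function name `smote_sketch`, its parameters, and the toy points are all assumptions made for this example, and neighbors are found by brute force.

```python
import numpy as np

def smote_sketch(X_min, n_new, k=5, seed=0):
    """Generate n_new synthetic points from minority samples X_min (illustrative only)."""
    rng = np.random.default_rng(seed)
    n = len(X_min)
    k = min(k, n - 1)  # a sample cannot have more neighbors than there are other samples

    # Pairwise Euclidean distances between minority samples (brute force).
    diffs = X_min[:, None, :] - X_min[None, :, :]
    dists = np.linalg.norm(diffs, axis=2)
    np.fill_diagonal(dists, np.inf)               # a point is not its own neighbor
    neighbors = np.argsort(dists, axis=1)[:, :k]  # indices of the k nearest neighbors

    base = np.arange(n_new) % n                   # cycle over the minority examples
    picked = neighbors[base, rng.integers(0, k, size=n_new)]  # one random neighbor each
    lam = rng.random((n_new, 1))                  # interpolation factor in [0, 1)

    # Each new point lies on the segment between a base sample and its chosen neighbor.
    return X_min[base] + lam * (X_min[picked] - X_min[base])

# Hypothetical minority-class points in a 2D feature space.
X_min = np.array([[1.0, 1.0], [1.5, 2.0], [2.0, 1.0],
                  [2.5, 2.5], [3.0, 1.5], [1.2, 2.8]])
X_new = smote_sketch(X_min, n_new=10, k=3)
print(X_new.shape)  # (10, 2)
```

Because every synthetic point is a convex combination of two real minority samples, it can never fall outside the range already covered by the minority class. That is also why SMOTE cannot invent information about regions where no minority examples exist.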

How SMOTE works | Image by Author

#Implementing SMOTE Correctly in Python

To avoid data leakage, where synthetic points derived from the full dataset end up influencing the evaluation, it is best to use a pipeline. The imbalanced-learn library provides a Pipeline object that applies SMOTE only when the pipeline is fitted, never when it predicts. Whether you use a simple hold-out split or cross-validation, resampling therefore touches only the training data, leaving the test set untouched and representative of real-world data.

The following example demonstrates how to integrate SMOTE into a machine learning workflow using scikit-learn and imblearn:

```python
from imblearn.over_sampling import SMOTE
from imblearn.pipeline import Pipeline
from sklearn.model_selection import train_test_split
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import classification_report

# X, y: feature matrix and class labels, assumed to be loaded already

# Split data into training and testing sets first
# (stratify=y keeps the class proportions the same in both partitions)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=42
)

# Define the pipeline: resampling, then modeling
# The imblearn Pipeline applies SMOTE only when fitting, never when predicting
pipeline = Pipeline([
    ('smote', SMOTE(random_state=42)),
    ('classifier', RandomForestClassifier(random_state=42))
])

# Fit the pipeline on training data
pipeline.fit(X_train, y_train)

# Evaluate on the untouched test data
y_pred = pipeline.predict(X_test)
print(classification_report(y_test, y_pred))
```

By using the Pipeline, you ensure that resampling happens within the training context only. This prevents synthetic information from “bleeding” into your evaluation set, providing a much more honest assessment of how your model will handle imbalanced classes in production.

#Common Misuses of SMOTE

Let’s look at three common ways SMOTE is misused in machine learning workflows, and how to avoid them:

- Applying SMOTE before partitioning the dataset into training and test sets: This is a very common error, usually made accidentally by less experienced data scientists. When SMOTE runs on all the available data, synthetic points end up in both the training and test partitions, and points in the test set may be interpolated from training examples (and vice versa). This leakage is the “not-so-perfect” recipe for unrealistically inflated evaluation metrics. The right approach is simple: split the data first, then apply SMOTE only to the training set. Planning to use k-fold cross-validation as well? Even better, as long as resampling happens inside each training fold rather than before the folds are created.

- Over-balancing: Blindly resampling until class proportions match exactly is another common error. In many cases, achieving that perfect balance is not only unnecessary but can also be counterproductive and unrealistic given the domain or class structure. This is particularly true in multiclass datasets with several sparse minority classes, where SMOTE may create synthetic examples that cross class boundaries or lie in regions where no real data is found. In other words, noise may be inadvertently introduced, with undesired consequences such as overfitting. A safer approach is to proceed gradually: raise the minority class proportion in small increments and check whether validation metrics actually improve.

- Ignoring the context around metrics and models: Overall accuracy is easy to obtain and interpret, but it can be a misleading, “hollow” metric that hides your model’s inability to detect the minority class. This is a critical issue in high-stakes domains like banking and healthcare, for instance when detecting rare diseases. SMOTE can help improve recall, but it can also reduce its counterpart, precision, by introducing noisy synthetic samples, and that trade-off may not align with business goals. To properly evaluate both your model and SMOTE’s contribution to its performance, look jointly at metrics like recall, F1-score, Matthews correlation coefficient (MCC, a “summary” of the whole confusion matrix), and precision-recall area under the curve (PR-AUC). Likewise, consider strategies like class weighting or threshold tuning, either alongside SMOTE or as alternatives to it.

#Concluding Remarks

This article revolved around SMOTE, a commonly used technique for addressing class imbalance when building machine learning classifiers on real-world datasets. We identified some common misuses of this technique and offered practical advice for avoiding them.

Iván Palomares Carrascosa is a leader, writer, speaker, and adviser in AI, machine learning, deep learning & LLMs. He trains and guides others in harnessing AI in the real world.